Transferring Adversarial Perturbation

Deep learning models have been shown to perform extremely well on many image-based tasks, such as image classification, image generation, and image caption generation. On these tasks, deep learning methods have been shown to capture the information present in images and make intelligent decisions.

Much of what such models learn depends on the dataset they are trained on. Is it possible to enhance the information present in the data by adding perturbations and noise to it? We aim to find a possible answer to this question.

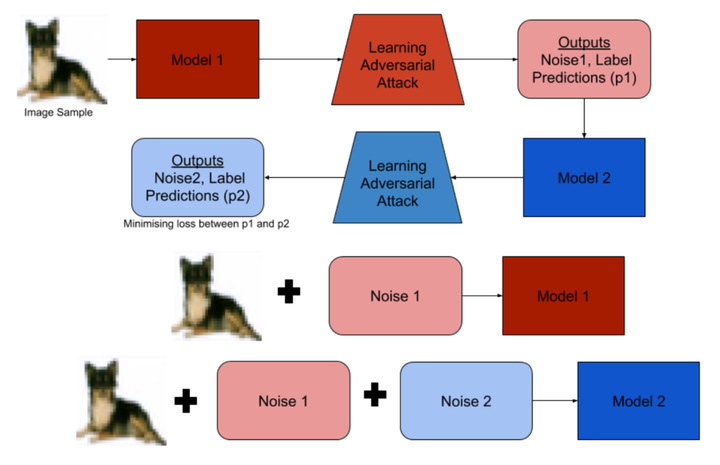

We propose an algorithm for transferring data perturbations across different models and domains. In particular, we are interested in transferring adversarial data perturbations that act as attacks on a model, with the following aims:

- Using transfer learning to learn perturbations for a model, i.e., adapting noise learned for one model to another model.

- Maintaining the visual appearance of data samples after transferring perturbations.

- Adapting the noise with just one pass over the dataset (one epoch), which reduces the computational cost.
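The three aims above can be illustrated with a toy sketch. This is not the proposed algorithm itself. It assumes hypothetical linear classifiers in place of deep models and synthetic 10-dimensional features in place of flattened images. It also assumes a targeted universal perturbation: one shared noise vector that pushes every input toward class 1, learned by projected sign-gradient steps. Clipping to an L-infinity ball of radius `eps` stands in for "maintaining visual appearance". All names (`learn_universal_perturbation`, `fooled_rate`, `eps`, `lr`) are illustrative.

```python
import numpy as np

# Hypothetical stand-ins for the "models": linear classifiers with
# p(y=1 | x) = sigmoid(w . x + b). Not the models used in this project.


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def input_grad(w, b, x, y):
    """Gradient of binary cross-entropy w.r.t. the input x of a linear model."""
    return (sigmoid(x @ w + b) - y) * w


def learn_universal_perturbation(w, b, X, eps, lr, target=1, delta=None, epochs=1):
    """Learn one perturbation shared by all inputs (targeted universal attack).

    Each step moves delta to lower the loss of the target label, then clips it
    back into the L-infinity ball of radius eps so inputs stay visually similar.
    One pass over X counts as one epoch.
    """
    delta = np.zeros(X.shape[1]) if delta is None else delta.copy()
    for _ in range(epochs):
        for x in X:
            g = input_grad(w, b, x + delta, target)
            delta = np.clip(delta - lr * np.sign(g), -eps, eps)
    return delta


def fooled_rate(w, b, X, Y, delta, target=1):
    """Fraction of non-target samples that the model assigns to the target label."""
    mask = Y != target
    preds = (X[mask] + delta) @ w + b >= 0  # True means "predicted class 1"
    return float(np.mean(preds == bool(target)))


# Synthetic data (assumption): labels come from a hidden linear rule.
rng = np.random.default_rng(0)
w_true = rng.normal(size=10)
X = rng.normal(size=(200, 10))
Y = (X @ w_true > 0).astype(int)

# Two related but different models: a source and a target (hypothetical).
w_src = w_true + 0.3 * rng.normal(size=10)
w_tgt = w_true + 0.3 * rng.normal(size=10)
b = 0.0
eps, lr = 0.5, 0.05

# 1) Learn the perturbation on the source model.
delta_src = learn_universal_perturbation(w_src, b, X, eps, lr)
# 2) Transfer: start from delta_src and adapt it to the target in one epoch.
delta_tgt = learn_universal_perturbation(w_tgt, b, X, eps, lr, delta=delta_src, epochs=1)

print("clean      ", fooled_rate(w_tgt, b, X, Y, np.zeros(10)))
print("transferred", fooled_rate(w_tgt, b, X, Y, delta_src))
print("adapted    ", fooled_rate(w_tgt, b, X, Y, delta_tgt))
```

On a linear model, a shared perturbation shifts every logit by the same amount, `w . delta`. In this toy setting, the one-epoch adaptation therefore settles at `eps * sign(w_tgt)`, which is the largest shift the L-infinity budget allows. Deep models would not behave this simply, so treat the sketch only as an illustration of the learn, transfer, and adapt loop.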