Monocular SLAM

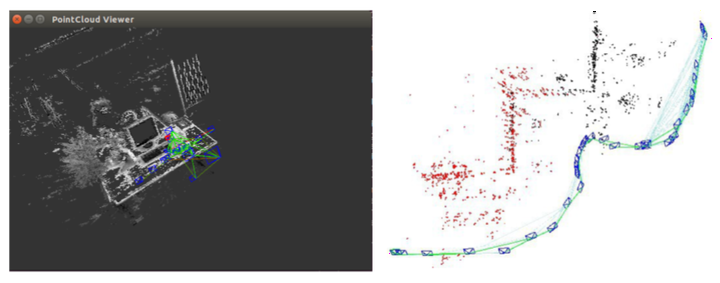

Simultaneous localization and mapping (SLAM) is the problem of building a map of an unknown environment while simultaneously tracking the agent's location within it. Epipolar geometry provides a multi-view solution to this problem. Monocular SLAM performs this task with a single camera, which reduces cost and hardware complexity. Multiple algorithms and benchmark datasets have been developed for studying this problem. Applications of monocular SLAM extend to domains such as robotics, entertainment (augmented reality), telepresence, and more. We explore various monocular SLAM algorithms: Parallel Tracking and Mapping (PTAM), Large-Scale Direct Monocular SLAM (LSD-SLAM), and ORB-SLAM.

Initial proposal: We would focus on evaluating performance on benchmark datasets and analyzing the results. We plan to use the algorithm with the best performance on the benchmark dataset on real-time data.

Updated proposal (after feedback): We would focus on analyzing the results of our algorithm on varying sequences and on understanding the success and failure cases of our model. The sequences are chosen from a benchmark dataset, TUM RGB-D, so as to allow enough variation; we use only the RGB component of the data.
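To make the role of epipolar geometry concrete, the following is a minimal sketch in Python with NumPy, not part of any of the algorithms named above. It builds a synthetic two-view setup and checks the epipolar constraint `x2^T E x1 = 0` with the essential matrix `E = [t]x R`. The relative pose (`R`, `t`), the random 3D points, and the helper names `skew` and `rotation_y` are all illustrative assumptions.

```python
import numpy as np

def skew(t):
    """Return the 3x3 cross-product (skew-symmetric) matrix of a 3-vector."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def rotation_y(angle):
    """Rotation by `angle` radians about the camera y-axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

# Assumed relative pose between the two views: X2 = R @ X1 + t
R = rotation_y(0.1)
t = np.array([0.5, 0.0, 0.1])

# Essential matrix for this pose
E = skew(t) @ R

# Synthetic 3D points in front of the first camera (depth between 4 and 8)
rng = np.random.default_rng(0)
points_cam1 = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(20, 3))
points_cam2 = points_cam1 @ R.T + t

# Normalized image coordinates (x, y, 1) in each view
x1 = points_cam1 / points_cam1[:, 2:3]
x2 = points_cam2 / points_cam2[:, 2:3]

# Epipolar residual x2^T E x1 for every correspondence
residuals = np.einsum("ni,ij,nj->n", x2, E, x1)
max_residual = np.max(np.abs(residuals))
print("max |x2^T E x1| =", max_residual)
```

The residuals vanish up to floating-point error for correct correspondences. Scaling `t` by any nonzero factor scales `E` and leaves the constraint satisfied, which is one way to see why a single camera recovers translation only up to an unknown scale.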